The Business Intelligence and Analytics journey begins with descriptive analytics. This is a well-established discipline with many, many competent players at every price point. They can automate and streamline the dissemination of information about what happened. Human brain power and domain knowledge are then typically leveraged for the *why* of it, and consequently for what actions to take because of it.

The next step in the journey is to leverage the description of the past to determine what *will* happen. Big Data technology is accelerating rapidly: cloud options and new tech make it easier to ingest, store, and analyze large, varied sets of data, reducing the barrier to entry. As a result, MANY debates ensue, including:

- Causation vs. Correlation

- The sterile application of statistics over domain knowledge and real-world, feet-on-the-street experience

- The moral and legal issues surrounding what data can be leveraged (think profiling)

And then you get into long-winded debates about what tools and resources should be leveraged:

- Open source options in pure Data Science, and the increasingly scarce and expensive resources needed to wield them. Splintering off within that debate are arguments about which models, regression techniques, etc. are most appropriate, usually held by folks well versed in data science but lacking deep domain knowledge. (The movie Sully was an excellent illustration.)

- The investment in long-established predictive analytics solutions (SPSS, SAS, etc.)

- The use of automated tools that choose models for the user, and how accurate those may be. That depends on the industry: if you’re not saving lives or working in a high-precision industry, they’re probably just fine. (Perhaps you caught today’s webinar on cognitive assistance in Data Science? https://www.ibm.com/analytics/us/en/events/machine-learning/)

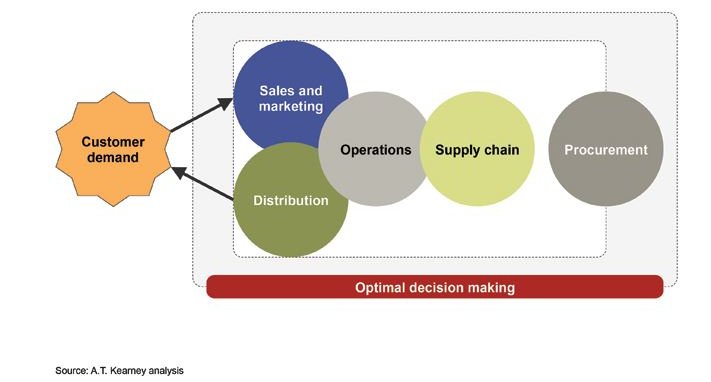

Where the money is to be saved or made is on the *prescriptive* side of the equation. For example, what if you could leverage volume discounts, early-payment discounts (net 2/10 terms), and surplus purchases to reduce COGS? Or alternatively, just-in-time inventory management, or seemingly counterintuitive mid-shift machine changes that increase output and reduce cost per piece? Or perhaps the perfect timing of promotions to move products with reduced COGS at a higher margin? Hot day coming up? Maybe that bulk buy of cheap beer would net some extra profit?
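To put a number on the early-payment discount idea, here is a minimal sketch. It assumes "net 2/10" refers to terms commonly written as 2/10 net 30 (take 2% off if you pay within 10 days, otherwise the full amount is due in 30 days); the function name and the 365-day year are illustrative choices, not anything from a specific tool.

```python
def annualized_cost_of_skipping_discount(
    discount=0.02,      # 2% off for paying early (assumed terms)
    discount_days=10,   # days allowed to claim the discount
    net_days=30,        # days until the full invoice is due
    days_in_year=365,
):
    """Simple (non-compounded) annualized rate you effectively pay
    by holding your cash until the net date instead of paying early."""
    # Paying late means "borrowing" the discounted amount for the extra days.
    extra_days = net_days - discount_days
    period_rate = discount / (1 - discount)
    return period_rate * (days_in_year / extra_days)


rate = annualized_cost_of_skipping_discount()
print(f"Skipping a 2/10 net 30 discount costs roughly {rate:.1%} a year")
```

Under these assumptions the figure comes out at roughly 37% a year, which is why consistently capturing these discounts can be a meaningful lever on COGS.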

What if you could run through 30,000 different scenarios across purchasing, manufacturing, shipping, employee hours, and promotions to eke out every bit of savings and efficiency, maximizing every investment, reimbursement, and discount available? Before the technology existed, this was done through trial and error, studies, pilots, gut decisions, divining rods, etc. What if you could use massive amounts of data to speed through and get to the optimal solution with very little investment?
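As a toy illustration of "running through the scenarios," the sketch below brute-forces every combination of order size, shift count, and promotion depth and keeps the most profitable one. Every number, name, and cost rule in it is made up for illustration, and it covers 27 scenarios rather than 30,000. Real prescriptive tools usually rely on optimization solvers rather than plain enumeration, so treat this as the idea only.

```python
from itertools import product

# All figures below are hypothetical, chosen only to make the example concrete.
ORDER_SIZES = [1000, 2000, 5000]   # units of material purchased
SHIFTS = [1, 2, 3]                 # production shifts run
PROMO_PCTS = [0, 5, 10]            # promotional price cut, in percent

BASE_PRICE = 10.00                 # list price per unit
UNITS_PER_SHIFT = 1000             # production capacity per shift
COST_PER_SHIFT = 1500.00           # labor and overhead per shift


def unit_cost(order_size):
    """Volume discount: bigger orders get a cheaper per-unit cost."""
    if order_size >= 5000:
        return 4.00
    if order_size >= 2000:
        return 4.50
    return 5.00


def demand(promo_pct):
    """Toy demand curve: deeper promotions move more units."""
    return 1500 + 120 * promo_pct


def profit(order_size, shifts, promo_pct):
    """Profit for one scenario; unsold material is simply written off."""
    price = BASE_PRICE * (100 - promo_pct) / 100
    units_sold = min(order_size, shifts * UNITS_PER_SHIFT, demand(promo_pct))
    revenue = units_sold * price
    costs = order_size * unit_cost(order_size) + shifts * COST_PER_SHIFT
    return revenue - costs


# Enumerate every combination and keep the most profitable one.
scenarios = list(product(ORDER_SIZES, SHIFTS, PROMO_PCTS))
best = max(scenarios, key=lambda s: profit(*s))

print(f"Evaluated {len(scenarios)} scenarios")
print(f"Best: order={best[0]}, shifts={best[1]}, promo={best[2]}% "
      f"-> profit ${profit(*best):,.2f}")
```

With these made-up inputs, the winner is the mid-sized order with two shifts and a 5% promotion: the biggest volume discount loses because capacity and demand cannot absorb the extra material. That is the kind of interaction that is hard to spot by gut feel.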

This is where your data, plus publicly available data sets, can increase your bottom line: predict demand, then take advantage of bulk purchases or just-in-time delivery by adjusting manufacturing schedules and supply chain options. Can you predict with 100% accuracy, without an occasional misstep? Meh, unlikely. Though I will say that with rudimentary tools and unsophisticated models, I personally was able to forecast $1 million per month in customer residuals within about $10K. So I can’t imagine that, with the host of tools available today, you’d be too far off (barring election forecasts; sorry, Nate Silver, that was a rough lesson in human-reported metrics).
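For scale, a miss of about $10K on a $1 million forecast is roughly a 1% error. The sketch below shows one common way to express that, mean absolute percentage error (MAPE). The function is a generic illustration, and the direction of the miss (forecasting $990K against a $1M actual) is an assumption, since the text only gives the size of the gap.

```python
def mean_absolute_percentage_error(actuals, forecasts):
    """Average of |actual - forecast| / |actual| across all periods."""
    if len(actuals) != len(forecasts):
        raise ValueError("actuals and forecasts must be the same length")
    errors = [abs(a - f) / abs(a) for a, f in zip(actuals, forecasts)]
    return sum(errors) / len(errors)


# One month: $1M actual versus an assumed $990K forecast (a $10K miss).
mape = mean_absolute_percentage_error([1_000_000], [990_000])
print(f"Forecast error: {mape:.1%}")
```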

However, the gains to be made with better decision support will net out positive even with some missteps. That’s pretty much a guarantee.

I will forewarn you: when you take this journey, you may find yourself steeped in open-source language holy wars and theoretical debates. Anyone claiming to have the bulletproof system needs to “hang their pants over a telephone wire” because those suckers are on fire! They’re lying to you or themselves... but take some guidance, weigh the options, and think *pragmatic* progress over perfection. Look for the flexibility to adjust, and do not forget the cost of the talent needed to execute; sometimes the cost of hiring the superstar data scientist outweighs what you would lose with a slightly less accurate forecast.

I’m always open to chat, brainstorm, offer what I do know, or play devil’s advocate on what you’ve been told, gratis.

Why Aviana?

Our story: https://vimeo.com/106965002

Aviana has documented over $1.8 billion in ROI for its clients. The overwhelming majority of Aviana clients are repeat clients because we keep working until our client is successful. We give our best advice and service regardless of profit.